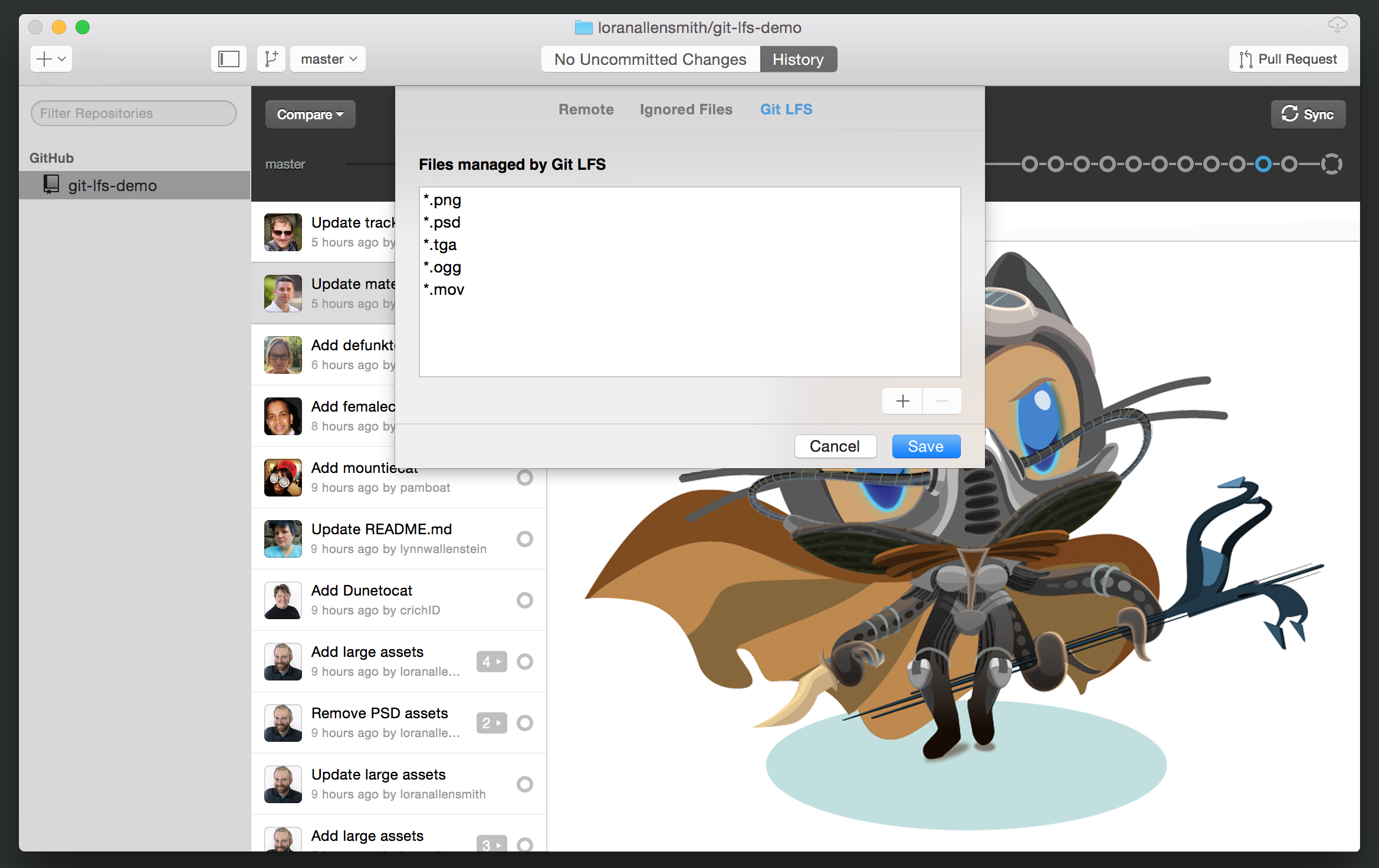

Git-lfs is a hack and it has caused me pain every time I've used it, despite GitLab having good support for it. Some of this is implementation detail: the command line UI has some weirdness to it, and there's no clear error if someone doesn't have git-lfs installed when cloning, so something further down your build process breaks with a weird error because you've got a marker file instead of the expected binary blob. Some of it is inherent though: the hardest problem is that we now can't easily mirror the git repo from our internal GitLab to the client's GitLab, because the config has to hold the address of the HTTP server with the blobs in. Storing everything directly in Git is fine for (compressible) text files, less fine for large binary blobs.
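To make the "marker file instead of the expected binary blob" failure show up early, a build could check for unresolved LFS pointers before doing anything else. This is only a rough sketch: it assumes pointer files begin with the `version https://git-lfs.github.com/spec/v1` line and stay under roughly 1 KB, and the function names `is_lfs_pointer` and `find_lfs_pointers` are made up for illustration.

```python
from pathlib import Path

# First line of a Git LFS pointer file (assumed here to follow the v1 pointer format).
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"
# Pointer files are tiny text files; anything bigger is treated as real content.
MAX_POINTER_SIZE = 1024


def is_lfs_pointer(path: Path) -> bool:
    """Return True if `path` looks like an LFS pointer rather than the real blob."""
    if not path.is_file() or path.stat().st_size > MAX_POINTER_SIZE:
        return False
    with path.open("rb") as fh:
        head = fh.read(len(LFS_POINTER_PREFIX))
    return head == LFS_POINTER_PREFIX


def find_lfs_pointers(root: Path) -> list[Path]:
    """List every file under `root` (skipping .git) that is still an LFS pointer."""
    return sorted(
        p
        for p in root.rglob("*")
        if ".git" not in p.relative_to(root).parts and is_lfs_pointer(p)
    )
```

Calling `find_lfs_pointers(Path("."))` at the start of a build and aborting with a message like "git-lfs is not installed or `git lfs pull` was not run" if the list is non-empty would at least turn the weird downstream error into a clear one.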

# Git LFS vs git-annex

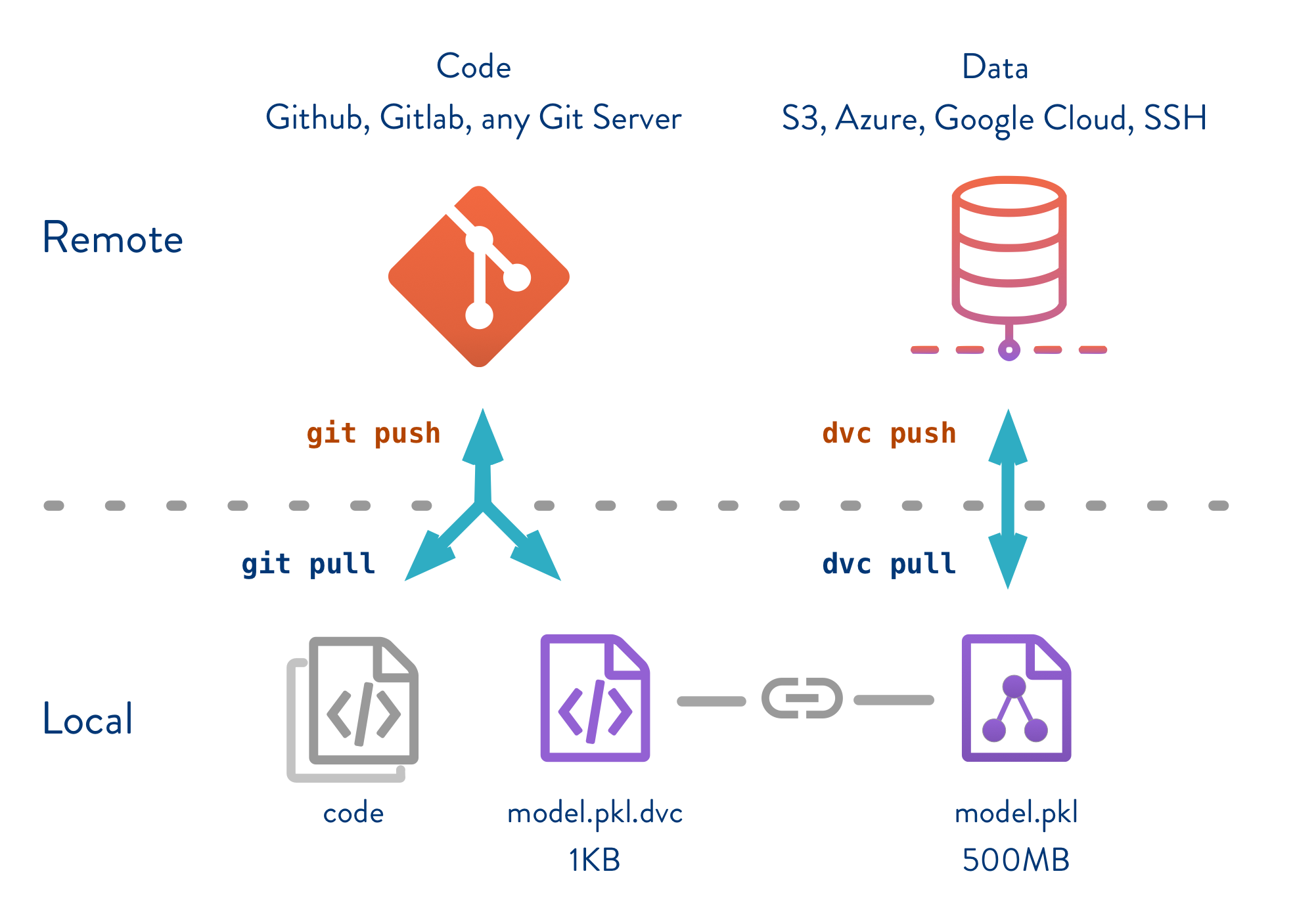

The problem with large files is not so much that putting a 1 GB file in Git is a problem. If you just have one revision of it, you get a 1 GB repo, and things run at a reasonable speed. The problem is when you have 10 revisions of the 1 GB file and you end up dealing with 10 GB of data when you only want one, because the default git clone model is to give you the full history of everything since the beginning of time. Some combination of features can help with that, shallow clones among them (see the `--depth` argument to `git clone`).

My impression is that largefiles is basically the only game in town, and Mercurial LFS if anything is meant to be even more like Git LFS, to the point of being compatible with it. The thing I'm more curious about is that I don't immediately see how large file support in git (or mercurial), whether implemented as a separate tool or natively, could ever feasibly be "transparently erasable," that is, rewindable to be absolutely identical to a repository with no large file support without rewriting revision history. There might be a way (maybe you could somehow maintain a duplicate shadow revision history and transparently intercept syscalls?), but the approaches I can think of all have pretty hefty downsides and feel even more like hacks than the current crop of tools.
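On the shallow clone point above: a small sketch of what "only give me the latest revision" looks like when scripted. The helper names and the example URL are hypothetical; the only real interface used is `git clone --depth <n>`.

```python
import subprocess


def shallow_clone_command(url: str, dest: str, depth: int = 1) -> list[str]:
    """Build the argv for a clone that fetches only the last `depth` commits."""
    if depth < 1:
        raise ValueError("depth must be at least 1")
    return ["git", "clone", "--depth", str(depth), url, dest]


def shallow_clone(url: str, dest: str, depth: int = 1) -> None:
    """Run the shallow clone; raises CalledProcessError if git fails."""
    subprocess.run(shallow_clone_command(url, dest, depth), check=True)


if __name__ == "__main__":
    # Hypothetical repository URL, for illustration only.
    print(" ".join(shallow_clone_command("https://example.com/big-assets.git", "big-assets")))
```

With `depth=1` you should end up downloading roughly the one revision of the 1 GB file you actually want, rather than all ten.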

To preface: though I've read a fair amount about Mercurial, I can count on my fingers the number of times I've actually used a Mercurial repo, and I've only ever used largefiles as a toy, so I am very much a Mercurial newbie. So there is a chance I may get something wrong here.

The "one-way door", as I understand the article to be describing it, is the additional layer of centralization that Git LFS brings. In particular it's pretty annoying to always have to spin up a full HTTPS server just to have access to your files. There is now always a source of truth that is inconvenient to work around, even when you might still have the files lying around on a bunch of different hard drives or USB drives.

With git-annex, on the other hand, it is true that without rewriting history, even if you disable git-annex moving forward, you'll still have symlinks in your git history. However, as long as you still have your exact binary files sitting around somewhere, you can always import them back on the fly. So, e.g., to move away from git-annex you can just commit the binary files directly to your git directory, and then just copy them out to a separate folder whenever you go back to an old commit and re-import them.

But perhaps I'm interpreting the author incorrectly, in which case it's hard for me to see how any solution for large files in git would allow you to move back, without rewriting history, to an ordinary git repository without large file support.
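To make the git-annex "import them back on the fly" idea concrete, here is a rough sketch of what it could look like for an old checkout full of dangling annex symlinks. It rests on assumptions: that the repo used the SHA256E backend, so each symlink target contains a key of the form `SHA256E-s<size>--<sha256><.ext>`, and that your spare copies of the binaries sit in some folder (`spare_copies` below). The function names are invented for this example and it is not how git-annex itself works internally.

```python
import hashlib
import os
import re
import shutil
from pathlib import Path

# Assumed key layout for the SHA256E backend: SHA256E-s<size>--<hex digest><.ext>
ANNEX_KEY = re.compile(r"SHA256E-s(\d+)--([0-9a-f]{64})")


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large binaries don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def restore_annexed_files(checkout: Path, spare_copies: Path) -> list[Path]:
    """Replace dangling annex symlinks in `checkout` with matching files from `spare_copies`."""
    by_hash = {sha256_of(p): p for p in spare_copies.rglob("*") if p.is_file()}
    restored = []
    for dirpath, dirnames, filenames in os.walk(checkout):
        if ".git" in dirnames:
            dirnames.remove(".git")  # never touch git's own data
        for name in filenames:
            path = Path(dirpath) / name
            if not path.is_symlink() or path.exists():
                continue  # regular file, or the annexed content is already present
            match = ANNEX_KEY.search(os.readlink(path))
            source = by_hash.get(match.group(2)) if match else None
            if source is None:
                continue  # not an annex link, or no spare copy with that hash
            path.unlink()
            shutil.copyfile(source, path)
            restored.append(path)
    return sorted(restored)
```

The point is just that the hash in the symlink is enough to find the right file again from any old drive, with no server involved, which is the contrast with Git LFS being drawn above.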

The way that the author talks about Mercurial as not having this problem makes me think they're talking about something related but subtly different. In particular, AFAICT, Mercurial requires the exact same thing as what you're pointing out: if you want to completely disable use of largefiles, you still have to run `hg lfconvert` at some point.
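For reference, the `hg lfconvert` step could be scripted roughly like this. It is a sketch based on my reading of the largefiles docs: `--to-normal` converts a largefiles repo back to normal files and writes the result to a new destination repo, and `--config extensions.largefiles=` is there only to make sure the extension is enabled for that one command. The function names and paths are placeholders.

```python
import subprocess
from pathlib import Path


def lfconvert_to_normal_command(source: Path, dest: Path) -> list[str]:
    """Build the argv that converts a largefiles repo at `source` into a normal repo at `dest`."""
    return [
        "hg",
        "--config", "extensions.largefiles=",  # enable the extension for this invocation
        "lfconvert",
        "--to-normal",
        str(source),
        str(dest),
    ]


def convert_to_normal(source: Path, dest: Path) -> None:
    """Run the conversion; raises CalledProcessError if hg fails."""
    subprocess.run(lfconvert_to_normal_command(source, dest), check=True)
```

Since the output is a separate, converted repository rather than the original one with a switch flipped, this looks to me like the same "rewrite history to get out" situation as with the git tools.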
